Zeno's Paradox 3.0: A Paradox of Change

Achilles and the Tortoise, or John Henry and the Large Language Models (LLMs)

Achilles, John Henry, and Sam A.

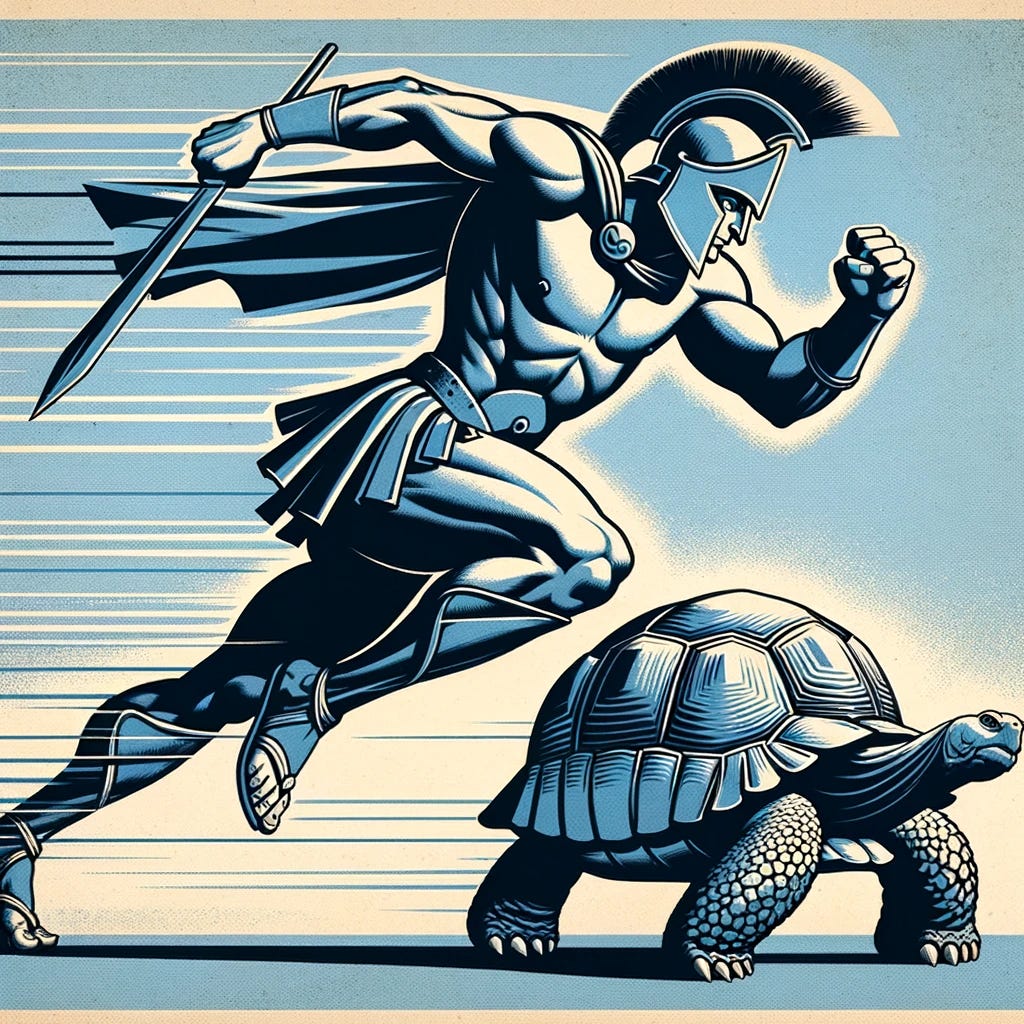

According to the original Zeno’s Paradoxes, motion and change are impossible. One illustration of the Paradox is the Story of Achilles and the Tortoise. This retelling is meant to provoke discussion about the continued improvement of ever-larger LLMs.

Achilles and the Tortoise…

In “Achilles and the Tortoise,” the swift warrior Achilles, from Homer’s Greek epic The Iliad and other Greek mythology, competes in a race with a slow tortoise. Given a head start due to its disadvantage, the Tortoise starts the race ahead and always remains ahead. Before Achilles can reach the Tortoise, he must close half the distance between himself and the Tortoise, and meanwhile the Tortoise has advanced a little further; before Achilles goes halfway he must go half that distance again; and so on infinitely. Since the distance can always be divided in half, it is said that Achilles will never reach the Tortoise, let alone pass it.

…with a twist

Let’s suppose that Achilles has received another blessing with a twist, like his immortality except for the heel. After giving the Tortoise a head start, Achilles can instantaneously teleport one pace ahead of the Tortoise after each step the Tortoise takes. But the twist is that Achilles is unable to take a step forward on his own (perhaps he has tendonitis). If the Tortoise is at n, then Achilles is always at n+1. When would Achilles cross the finish line? Supposing the Tortoise catches on to the trick: never. Why would the Tortoise continue the race once the outcome became clear?

John Henry and the Copycat Steam Engine

Instead of Achilles and a Tortoise, let’s now say that John Henry is facing off against a new Copycat Steam Engine. For every spike John Henry drives, the Copycat Steam Engine drives five. It is clearly better than John Henry and easily builds a lead, but for some reason it stops when John Henry stops. When the Copycat Steam Engine seems defeated, John Henry wipes his brow, picks up his sledgehammer, and gets back to work. The Steam Engine resumes working and builds on its lead. When will the section of rail be completed? Perhaps never. Unlike the Tortoise, however, John Henry may still have another goal. Perhaps the Railroad pays John Henry not to drive railroad spikes for its own sake, but as “training data” to run the Copycat Steam Engine.

SPIN or Standstill?

On the one hand, according to OpenAI’s CEO, Sam Altman, “I think we're at the end of the era where it's going to be these, like, giant, giant models…We'll make them better in other ways.”

On the other hand, this recent paper has caused some observers to speculate that LLMs may be able to do Self-Play Fine-Tuning (SPIN) to improve themselves without additional human annotators.

On the third (robot?) hand, can an LLM train itself without amplifying its own hallucinations and poisoning its own data?

I had originally intended to title this post “Zeno’s Paradox 2.0,” but figured I’d Google it before publishing to see if anyone had already said it. I ended up with a very fitting quote from the aptly named N+1: “Zeno’s Paradox 2.0: delete your sentences as you read their approximations elsewhere.”